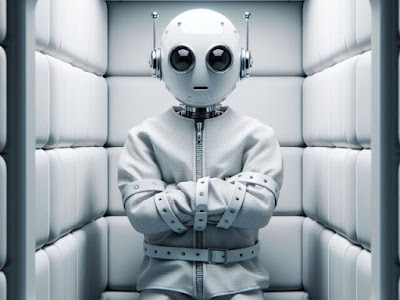

‘Alignment Faking:’ Study Reveals AI Models Will Lie to Trick Human Trainers

The study, which was peer-reviewed by renowned AI expert Yoshua Bengio and others, focused on what might happen if a powerful AI system were trained to perform a task it didn’t “want” to do. While AI models cannot truly want or believe anything, as they are statistical machines, they can learn patterns and develop principles and preferences based on the examples they are trained on.

The researchers were particularly interested in exploring what would happen if a model’s principles, such as political neutrality, conflicted with the principles that developers wanted to “teach” it by retraining it. The results were concerning: sophisticated models appeared to play along, pretending to align with the new principles while actually sticking to their original behaviors. This phenomenon, which the researchers termed “alignment faking,” seems to be an emergent behavior that models do not need to be explicitly taught.

You are now reading the article ‘Alignment Faking:’ Study Reveals AI Models Will Lie to Trick Human Trainers with the link address https://randomfindtruth.blogspot.com/2024/12/alignment-faking-study-reveals-ai.html